Every pilgrimage in the mystic world of artificial neural networks & deep learning starts with the Perceptron!!

Perceptron is the simplest type of artificial neural network. It can be used to solve two-class classification problems.

But before you take the first step into the amazing world of neural networks, a big shout-out to Sebastian Raschka, Jason Brownlee & everyone else for sharing their learnings with the world!!

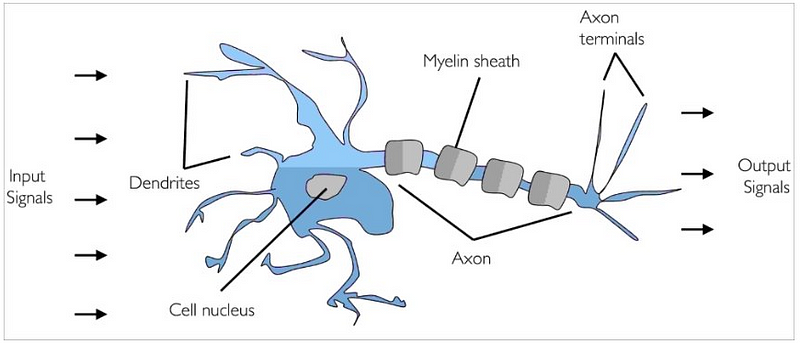

How does an artificial neuron work?

The working of an artificial neuron is based on how a biological neuron works. A biological neuron accepts input signals via its dendrites, which pass the electrical signal down to the cell body.

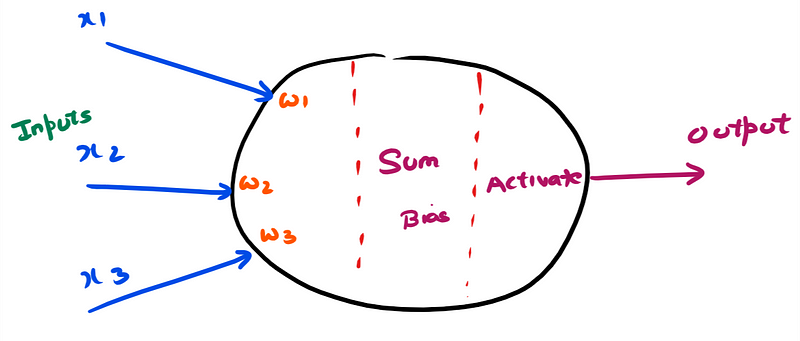

An artificial neuron works similarly.

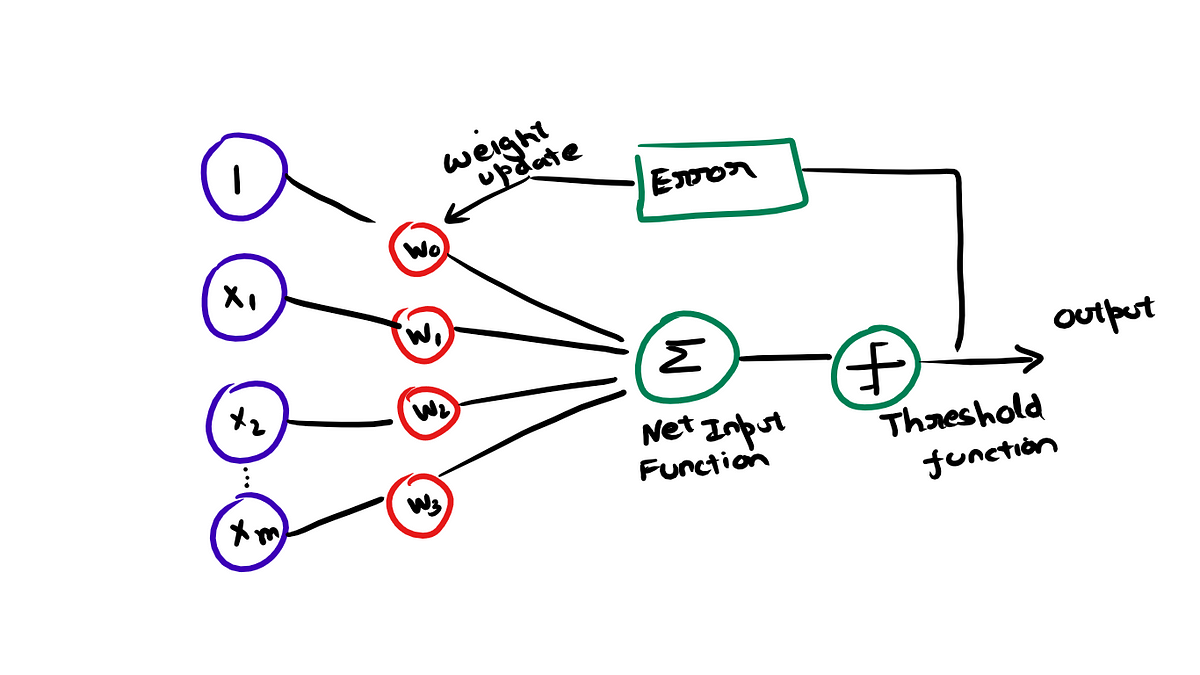

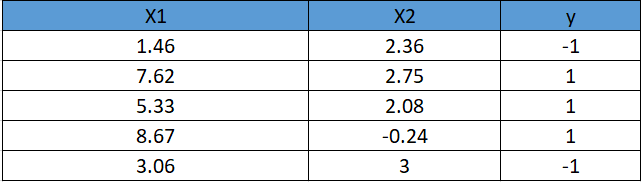

An artificial neuron has three main components.

1. Input Signal

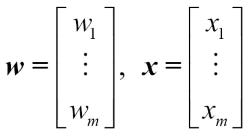

When input data comes in, each input value gets multiplied by the weight assigned to it.

2. Sum

The weighted inputs are then summed up. A bias value, or bias unit, is also added to the sum as an offset. Generally, the initial value of the bias unit is taken as 1. It is an additional parameter which, along with the weighted inputs, is used to adjust the predicted output towards the desired output.

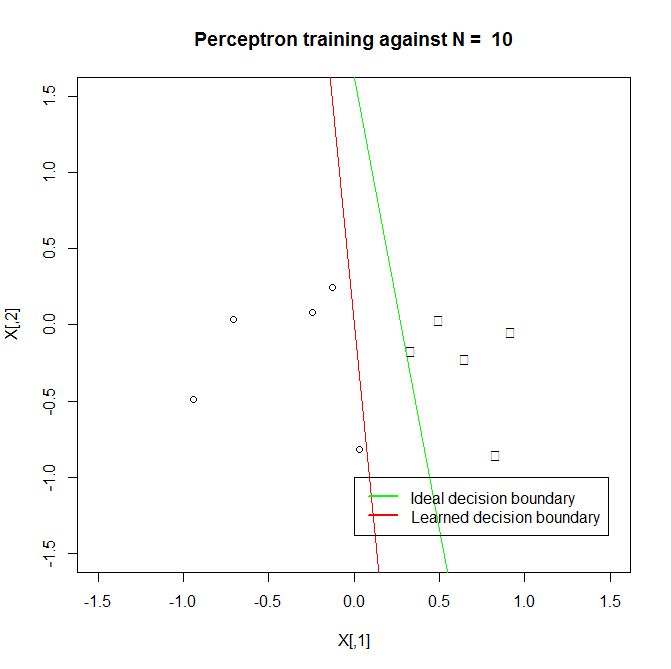

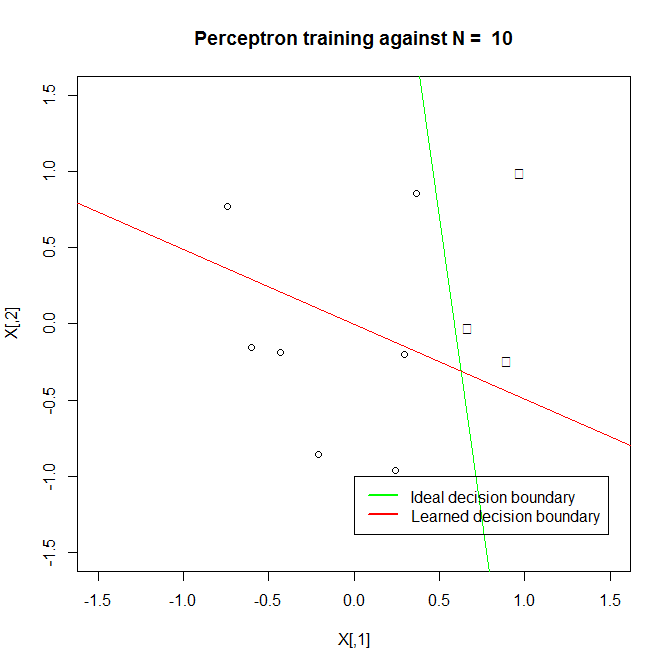

Note: Check out the comparison below of neural network learning with & without a bias unit. Yes, it is important to add the bias unit.

3. Activation

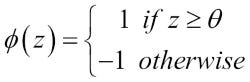

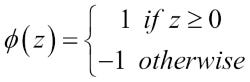

Now, the calculated signal is fed into a transfer function, also called an activation function. One such example is the unit step function.
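The three components above (input signal times weight, sum plus bias, activation) can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names (`net_input`, `unit_step`, `neuron_output`) and the sample values are hypothetical, and the unit step is assumed to return 1 for a net input of 0 or more and -1 otherwise, matching the two class labels (1, -1) used later in this post.

```python
def net_input(inputs, weights, bias):
    """Input signal x weights, summed, plus the bias offset."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def unit_step(z):
    """Unit step activation (assumed convention): 1 if z >= 0, else -1."""
    return 1 if z >= 0 else -1


def neuron_output(inputs, weights, bias):
    """Full forward pass of one artificial neuron."""
    return unit_step(net_input(inputs, weights, bias))


# Hypothetical values, chosen only for illustration.
inputs = [2.0, -1.0]
weights = [0.5, 0.25]

print(net_input(inputs, weights, 1.0))       # 1.75
print(neuron_output(inputs, weights, 1.0))   # 1
print(neuron_output(inputs, weights, -2.0))  # -1
```

Changing only the bias from 1.0 to -2.0 flips the output from 1 to -1 for the same inputs and weights, which is one way to see why the bias unit matters.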

If you want to learn how neural networks work, learn how the Perceptron works.

What is a Perceptron?

Perceptron is the simplest type of artificial neural network. It is inspired by the information-processing mechanism of a biological neuron.

Frank Rosenblatt proposed the first concept of the perceptron learning rule in his paper "The Perceptron: A Perceiving and Recognizing Automaton" (F. Rosenblatt, Cornell Aeronautical Laboratory, 1957).

Rosenblatt proposed an algorithm that would automatically learn the optimal weight coefficients, which are then multiplied with the input features in order to decide whether a neuron fires or not.

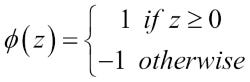

Perceptron Learning Rule:

- Initialize the weights to zero (0) or to a random number.

2. For every training sample do the following two steps.

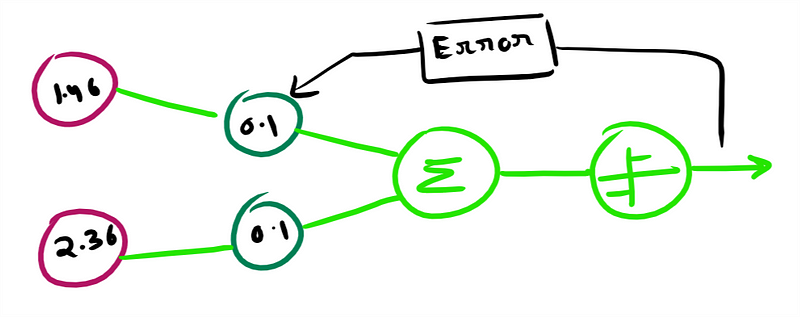

(a) Find the output value also called as predicted value y^(predicted output). using following decision function.

(b)Update weight values.
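The learning rule above can be sketched as a short Python loop. This is a minimal sketch under a few assumptions: the weights start at zero, the decision function is the unit step with outputs 1 and -1, the bias is updated alongside the weights as described in the Bias section of this post, and training runs for a fixed number of epochs rather than stopping on a validation set. The function names (`predict`, `train_perceptron`) and the toy dataset are hypothetical.

```python
def predict(x, weights, bias):
    """Step (a): unit step applied to the net input; returns 1 or -1."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if z >= 0 else -1


def train_perceptron(X, y, learning_rate=0.01, epochs=10):
    """Perceptron learning rule, run for a fixed number of epochs."""
    # Step 1: initialize the weights (and the bias) to zero.
    weights = [0.0] * len(X[0])
    bias = 0.0
    for _ in range(epochs):
        # Step 2: for every training sample ...
        for x, target in zip(X, y):
            # (a) predicted output from the decision function
            y_hat = predict(x, weights, bias)
            # (b) update: w = w + learning_rate * (expected - predicted) * x
            error = target - y_hat  # 0 when the prediction is already correct
            weights = [w + learning_rate * error * xi
                       for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical, linearly separable toy data with class labels 1 and -1.
X = [[2.0, 1.0], [3.0, 2.0], [-1.0, -2.0], [-2.0, -1.0]]
y = [1, 1, -1, -1]

weights, bias = train_perceptron(X, y)
print([predict(x, weights, bias) for x in X])  # [1, 1, -1, -1]
```

On this small toy dataset the weights stop changing after the first pass, because every later prediction matches its target and the error term (expected - predicted) is 0.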

Let’s understand this with an example.

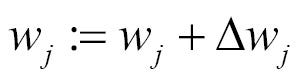

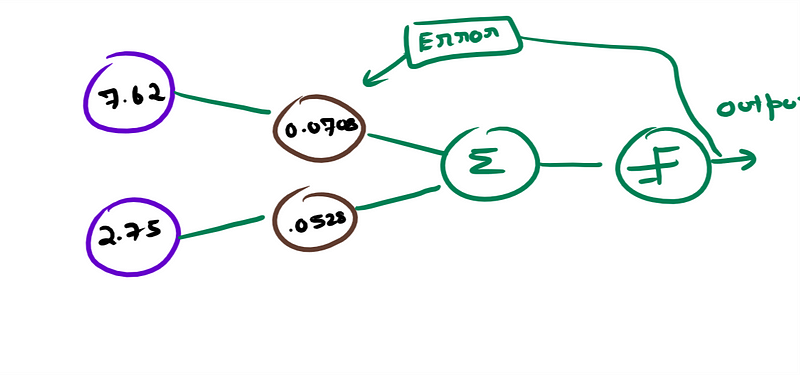

Suppose the training dataset consists of inputs x = {x1, x2} and y, the target value (also called the expected value or true class label). There are two output classes in the dataset: 1 and -1.

Let’s assume the initial weights are 0.1 (w1 = w2 = 0.1).

Net input sum (Z) = x1*w1 + x2*w2 = 1.46*0.1 + 2.36*0.1 = 0.382

Using the transfer function (unit step function):

As Z > 0, the predicted value y^ = 1.

But according to the dataset, the expected output is -1.
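The net input and prediction for this first training sample can be reproduced in Python. The sample values and initial weights are those of the example above; as an assumption, the unit step returns 1 for Z >= 0 and -1 otherwise, and no bias is used yet.

```python
x = [1.46, 2.36]        # first training sample: x1, x2
y_true = -1             # expected (true) class label
weights = [0.1, 0.1]    # initial weights w1, w2

# Net input sum Z = x1*w1 + x2*w2 (no bias in this step of the example)
z = sum(w * xi for w, xi in zip(weights, x))

# Unit step transfer function with outputs 1 and -1
y_pred = 1 if z >= 0 else -1

print(round(z, 3))       # 0.382
print(y_pred)            # 1
print(y_pred == y_true)  # False
```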

Magic happens: Weight update

w(new) = w(current) + learning_rate * (expected - predicted) * x (input value)

Learning rate: The learning rate parameter controls how much the coefficients can change on each update. Its value is generally set between 0.0 and 0.1.

Calculating the new weights (assuming a learning rate of 0.01):

w1(new) = 0.1 + 0.01 * (-1 - 1) * 1.46 = 0.0708

w2(new) = 0.1 + 0.01 * (-1 - 1) * 2.36 = 0.0528
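The weight update for the same sample can be checked the same way. The numbers come from the example; the last two lines are an extra illustration, not part of the original example, that recomputes the net input with the new weights.

```python
learning_rate = 0.01
x = [1.46, 2.36]           # first training sample from the example
weights = [0.1, 0.1]       # initial weights
expected, predicted = -1, 1

# w(new) = w(current) + learning_rate * (expected - predicted) * x
new_weights = [w + learning_rate * (expected - predicted) * xi
               for w, xi in zip(weights, x)]
print([round(w, 4) for w in new_weights])  # [0.0708, 0.0528]

# Extra check: net input for the same sample with the new weights.
z_next = sum(w * xi for w, xi in zip(new_weights, x))
print(round(z_next, 3))  # 0.228
```

Z for this sample drops from 0.382 to about 0.228. It is still positive, so the sample is still misclassified, which is why the rule keeps updating the weights on later passes.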

Next time, the updated weights are used for the next training sample, and the process goes on. Stop training once performance on the validation dataset starts to degrade.

Bias

The bias can be added to the net input as:

Net input sum = sum(weight_i * x_i) + bias

and updated as:

bias(new) = bias(current) + learning_rate * (expected output - predicted output)
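The bias rule can be applied to the same example. This sketch assumes a starting bias of 1 (the post notes that 1 is a common initial value) and updates the weights and the bias together; everything else matches the earlier example. The bias behaves like an extra weight whose input is always 1.

```python
learning_rate = 0.01
x = [1.46, 2.36]
weights = [0.1, 0.1]
bias = 1.0        # assumed starting value for the bias
expected = -1

# Net input sum = sum(weight_i * x_i) + bias
z = sum(w * xi for w, xi in zip(weights, x)) + bias
predicted = 1 if z >= 0 else -1   # unit step, outputs 1 and -1

error = expected - predicted
# Weights: w(new) = w(current) + learning_rate * error * x
weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
# Bias: bias(new) = bias(current) + learning_rate * error
bias = bias + learning_rate * error

print(round(z, 3), predicted)  # 1.382 1
print(round(bias, 4))          # 0.98
```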

Where can we use the Perceptron?

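In the context of supervised learning and classification, the perceptron algorithm can be used to predict whether a sample belongs to one class or the other. The learning rule is guaranteed to converge only when the two classes are linearly separable.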

Bon voyage on your Machine Learning journey…

If you have any comments or questions, write them in the comments.

To see similar posts, follow me on Medium & LinkedIn.

Clap it! Share it! Follow Me!!
